Cartesia: The SSM-Powered Platform for Ultra-fast Voice AI

🤖 Meet Karan, Albert, Brandon, Arjun & Chris: the researchers building the fastest voice AI in the world

(5 minutes)

Hi 👋, this is the Today in AI Newsletter: The weekly newsletter bringing you one step closer to building your own startup.

We analyze a cool, industry-shaping AI startup every week, with a full breakdown of what they do, how they make money, how much they’ve raised, and the opportunity ahead.

Let’s get to the good stuff in this email:

💡 This startup is building the next-gen voice stack - SSM-based models that run on-device and may even have passed the Turing test.

📈 They now have 50,000+ customers, including teams from Nvidia, Samsung, & ServiceNow.

🚀 They’ve raised $120M+ to date, including $100M from Kleiner Perkins, Index Ventures, Lightspeed, and NVIDIA

Before we get into it, a quick word from today’s sponsor: Delve

Turn RAG into riches with Delve’s AI for security questionnaires

Hate those endless security questionnaires? We do too. So we hired the sharpest AI brains from Stanford, MIT, and beyond—and made them do your grunt work. Delve uses state-of-the-art agentic RAG, not your average “retrieve + answer” hack.

Our agents think ahead: pull evidence, resolve conflicts, reason across your policy graph, interrogate your infrastructure, and draft bullet-proof responses. We’ve helped Lovable, Bland, Micro1 and a ton of the fastest-growing AI companies navigate and close reviews with almost every F50 - and saved them dozens of hours with our AI-native compliance platform.

Book a demo with Delve and get $1,000 off SOC 2, HIPAA, GDPR and more - and automate security questionnaires away forever. Use TODAYINAI1KOFF for 1K off.

So what’s the startup and who are the founders behind it? Here’s the story of Cartesia 📈

Cartesia was founded by Karan Goel, Albert Gu, Brandon Yang, Arjun Desai, and Chris Ré in 2023.

They build state space model (SSM) architectures for voice AI and audio, with the mission to build “enduring intelligence” - long-lived, interactive models that run in real time on any device.

Think of Transformers as librarians who keep every card on the table 📚, while SSMs are master note-takers who write a running summary of every book ⚡.

This lets Cartesia act like a robot brain: highly efficient, multimodal models that talk, listen, and reason with low latency and on-device privacy.

Under the hood, SSMs scale sub-quadratically, process new inputs in constant time, and love signal data like audio and video.

Where exact lookup is needed, Cartesia blends in attention - they have Hybrid stacks with a ~10:1 SSM:attention ratio.

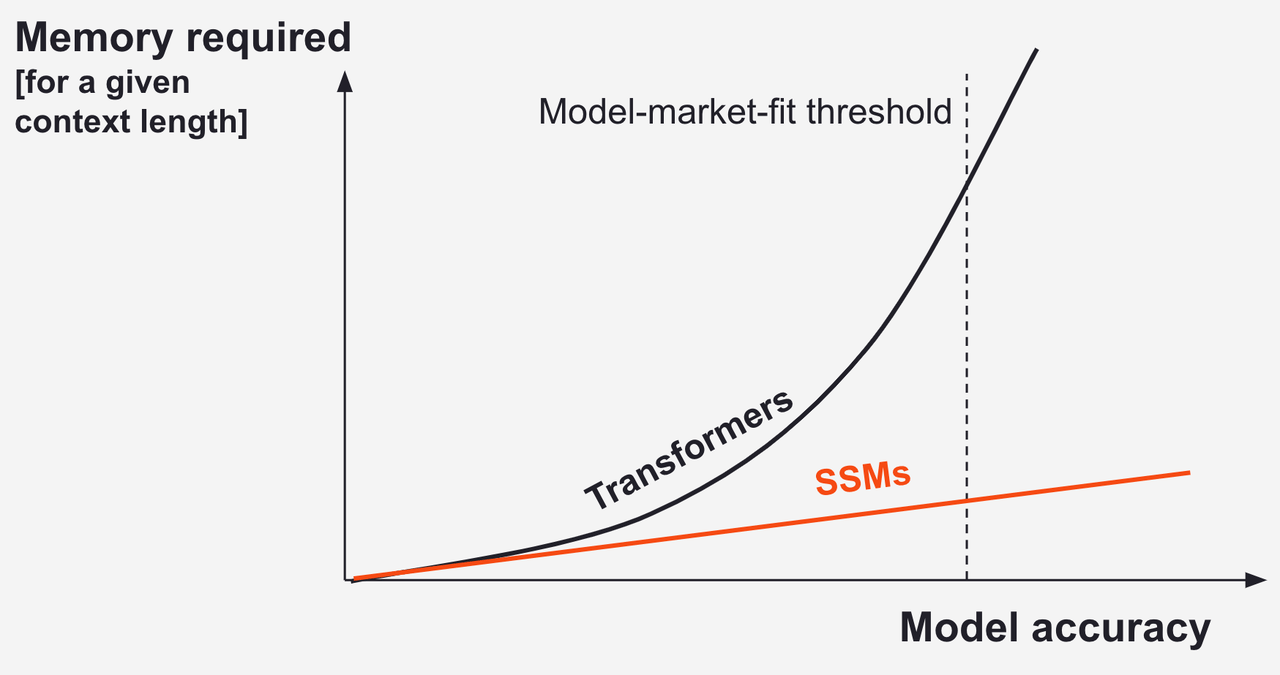

Deeper Dive into SSMs and why they’re better than Transformers for audio 🔊

Transformers attend to every past token, store huge KV caches, and pay a quadratic tax as context grows.
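To make the "quadratic tax" concrete, here is a rough back-of-the-envelope sketch in Python. It assumes plain causal self-attention, where each new token is compared against every token so far. The function names are ours, for illustration only, and the counts ignore layers, heads, and any optimizations a production model might use.

```python
def attention_pair_count(n_tokens: int) -> int:
    """Total query-key comparisons for causal attention over n_tokens.

    Token t (1-indexed) attends to itself and the t - 1 earlier tokens,
    so the total is 1 + 2 + ... + n = n * (n + 1) / 2.
    """
    return n_tokens * (n_tokens + 1) // 2


def kv_cache_entries(n_tokens: int) -> int:
    """Cached key/value entries per layer and head: one per past token."""
    return n_tokens


# Compare how both quantities grow as the context doubles.
for n in (1_000, 2_000, 4_000):
    print(n, attention_pair_count(n), kv_cache_entries(n))
```

Doubling the context roughly quadruples the comparisons, while the cache itself grows linearly with the history.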

SSMs stream inputs, update a compact state, and toss the raw tokens - so inference stays fast no matter the history.
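The streaming idea can be sketched with a toy linear SSM in Python. This is a minimal illustration, not Cartesia's architecture: the diagonal state, the parameter names (`decay`, `in_weights`, `out_weights`), and the numbers are all assumptions chosen for readability, and real models learn their parameters.

```python
from typing import Iterable, Iterator, List


def stream_ssm(
    inputs: Iterable[float],
    decay: List[float],
    in_weights: List[float],
    out_weights: List[float],
) -> Iterator[float]:
    """Run a toy diagonal linear SSM one input at a time.

    State update:  h[i] = decay[i] * h[i] + in_weights[i] * x
    Output:        y    = sum(out_weights[i] * h[i])
    """
    state = [0.0] * len(decay)  # fixed-size memory, independent of history length
    for x in inputs:
        # Each step reads only the current state and the new input,
        # never the earlier raw inputs.
        state = [a * h + b * x for a, h, b in zip(decay, state, in_weights)]
        yield sum(c * h for c, h in zip(out_weights, state))


# Hypothetical example: a single impulse followed by silence.
outputs = list(
    stream_ssm(
        [1.0, 0.0, 0.0],
        decay=[0.5, 0.9],
        in_weights=[1.0, 1.0],
        out_weights=[1.0, 1.0],
    )
)
print(outputs)
```

The work per step depends only on the state size, so the 10,000th input costs the same as the first. The trade-off is that the state is a lossy summary: the impulse fades from the output rather than being stored exactly.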

For audio and video, which are highly compressible, little is lost by summarizing the past into a compact state, so SSMs fit these signals well and scale better as inputs get longer.

Where precise retrieval is needed, Cartesia adds attention but keeps it light - around 10:1 weighted toward SSMs.
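One way to picture a ~10:1 hybrid stack is as a layer schedule. The Python sketch below is purely illustrative: the post gives only the ratio, so the even interleaving, the function name `hybrid_layer_pattern`, and the 22-layer depth are our assumptions, not a description of Cartesia's models.

```python
from typing import List


def hybrid_layer_pattern(n_layers: int, ssm_per_attention: int = 10) -> List[str]:
    """Return a layer-type list with one attention layer after every
    `ssm_per_attention` SSM layers (assumed placement)."""
    period = ssm_per_attention + 1
    return [
        "attention" if (i + 1) % period == 0 else "ssm"
        for i in range(n_layers)
    ]


# Hypothetical 22-layer stack.
layers = hybrid_layer_pattern(22)
print(layers.count("ssm"), layers.count("attention"))
```

Only the few attention layers need a growing cache for exact lookup; the rest of the stack keeps the fixed-size state.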

The result is fuzzy compression where it helps, exact lookup where it matters, and constant-time steps that run on a phone or laptop.

That’s how you get a model that listens, reasons, and speaks without the data-center bill.

Backstory 👀

Karan Goel grew up in New Delhi, India and watched his entire family hustle.

He earned a dual degree from IIT, ranked in the top 0.1% of India, and realized it wasn’t enough for him.

Growing up, he’d always been a serious gamer - he competed in Counter-Strike nationals, which distracted him from school. The rapid, sequential decision-making in games nudged him toward Reinforcement Learning.

He then decided to attend Carnegie Mellon to build AI.

Karan won the $35K Siebel Scholarship and stayed in the top quartile at Carnegie Mellon.

Then he moved to Stanford for a PhD and joined the Stanford AI Lab under Prof. Chris Ré to dive deeper into the algorithms.

He started building in AI from scratch, did research at Salesforce AI Research, got into the Greylock X Fellowship, and learned AI infrastructure from every angle.

He had a new goal: build real-time, interactive intelligence.

Meanwhile, Albert Gu was pushing a new wave of alternatives to Transformers: SSMs. He earned a reputation as the SSM mind and took a faculty role at CMU.

The two paths crossed over a shared obsession: faster, longer-context sequence models.

By 2020, the spark becomes a blaze. Karan helped develop State Space Models (SSMs) for deep learning and co-authored “It’s Raw! Audio Generation with State-Space Models,” the paper that introduced SaShiMi.

He finished the PhD thesis. He could now join any lab or any company - instead the call is, “we’re starting a company.”

At the same time, Karan, Chris and Albert see a once-in-a-generation chance: SSMs process raw audio “amazingly” well in SaShiMi, and the world needs real-time models.

They spin out in 2023, keep the research-first DNA from Stanford, and aim straight at audio - a perfect match for streaming, compression, and latency.

The team starts building Cartesia to disrupt Voice AI and raises a $22M seed from top VCs and angels.

The Hustle 🚀

Cartesia launched Sonic, billed as the world’s fastest TTS, in 2024 and went after latency-sensitive voice use cases first.

Why? Because milliseconds matter in contact centers, agent handoffs, and live dialog where turn-taking must feel human.

10,000+ customers signed up including Quora, Cresta, Rasa, and others that year.

By March 2025, Cartesia raised a $64M Series A led by Kleiner Perkins.

They also shipped Sonic 2.0, cutting latency from 90 ms to 45 ms to become the fastest voice AI in the world.

By August 2025, State Space Models go mainstream and Cartesia launches Line - a voice agent development platform, making it easy for anyone to build voice AI.

Fast forward to today, Cartesia just shipped Sonic-3, a streaming TTS with 42 languages, that emotes, and lands tricky acronyms/IDs with enterprise-grade accuracy.

It also offers Instant Voice Cloning from 3 seconds of audio, lowering onboarding friction to near zero.

Underneath, they kept building the hybrid SSM stack, focusing on constant-time inference, edge deployment, and on-device privacy.

Stats 📊

Sonic-3 streams in 42 languages, including 9 Indian languages, with exceptional Hindi performance.

It delivers ~190 ms end-to-end latency, with time to first audio (TTFA) at ~90 ms today; internal testing hit 25 ms, with a 12 ms synthesis target.

In blind tests, Sonic-3 was preferred 62% vs 38.6% for ElevenLabs. Check out this comparison video:

Are we passing the Turing test for voice?

— Karan Goel (@krandiash)

2:26 AM • Oct 29, 2025

Cartesia now has over 50,000 customers including teams from Nvidia, Samsung, & ServiceNow. They use the app for customer support, sales, interview screening and more.

They now have a team of more than 50 people, roughly 40% research / 40% engineering / 20% GTM & ops.

💰️ On October 28th 2025, Cartesia announced that they’ve raised $100M from Kleiner Perkins, Index Ventures, Lightspeed, and NVIDIA, bringing their total raise to ~$122M. This is likely at a unicorn valuation.

Before You Go 👋

Thank you for reading. Know someone who’d love this newsletter? Share the love. 🌟

🚀 Building something in AI? Get featured in front of 95,000+ founders, builders, and investors 👉 [email protected]